In Part 1 of this post I dismissed the TB:FTE metric because it does not measure what you think it measures. That, coupled with an analysis of some of the reasons people ask “what’s the typical TB:FTE ratio?”, pretty much retires the question. In short, there is only one reason to use TB:FTE as a yardstick for storage management (or any other infrastructure) staffing: to track your own workload over time. It’s a terrible justification for hiring and firing. I shared the first half of a quote from Jerry Thornton:

Basing your storage FTE requirements on the amount of terabytes under management is like Starbucks hiring Baristas based on the number of coffee beans in their store room.

That TB:FTE is a metric utterly incapable of measuring and comparing labor efficiency was just the appetizer. The fact remains that this is a surprisingly, even devilishly, difficult number to calculate properly if we are going to compare like-for-like IT shops. Difficult though it is, the challenge hints at a far more interesting question than “how does my staffing compare with my competitors?”

That interesting question is “What is the right TB:FTE ratio for my organization?”

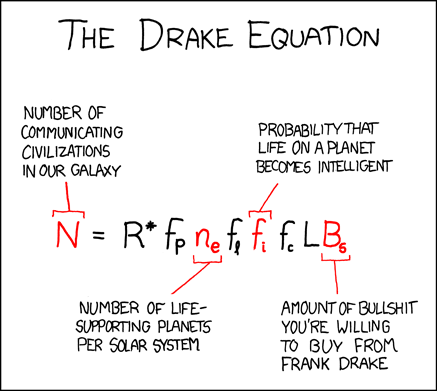

Replace TB with virtual machines, network ports or floor tiles for all I care; the problem is the same, and just as complicated. To prove it, I direct you to the Drake Equation:

If you do not already follow Randall Munroe’s web comic XKCD, fix that deficiency and go there. Back to the article…

The Drake Equation is a rough attempt to estimate the number of potential intelligent civilizations in our galaxy. Frank Drake really just scratched it out as a thought experiment, and depending on the values you plug in for each of his variables you can come up with some startling numbers. The problem is that every value you plug in is a wild guess; the “equation” itself is little more than a guess. (Is it even really an equation if there is no + symbol in there?) We try not to manage IT infrastructure on a guess, however, so we need an equation. Ours looks startlingly similar to Drake’s.
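For comparison, the commonly cited form of the Drake Equation is a pure product of seven estimated factors: the average rate of star formation in the galaxy, the fraction of those stars with planets, the number of potentially habitable planets per such system, the fractions of those that go on to develop life, intelligence and detectable communication, and finally the length of time such civilizations remain detectable. Here N is the number of such civilizations, not a headcount.

```latex
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
```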

Telling you how many people are needed to manage storage, servers, networks or anything else means understanding a LOT more about the specific client situation than the raw measure of resources under management. Since I committed to remaining consistent and focusing on storage, here is a stab at an actual formula, where N is the number of full-time storage administrators we need:

N = ((Rd*.1)*Na*Ntp*Nam*Nl*Nos*La*Sm*Sla)+1

The variable definitions:

1.) [Rd] Depth of Role: defines how much responsibility the storage admin has in managing disk, on a 1-10 scale where 1 means responsibility ends at a Fibre Channel or Ethernet port and 10 means full-time system administration as well, including L1-L3 support needs. Some shops have a strict role definition for the infrastructure stacks; others blend responsibilities, and we see more of the latter these days. Shops at the high end demand more skills, experience and time.

2.) [Na] Number of Arrays: Call them arrays, blades, NAS hosts or external disks if you like. Every unique storage device I manage has a discrete cost in time for monitoring, reporting, upgrades, maintenance and management. Two arrays from two different manufacturers may require three management tools. This adds up. All other things being equal, fewer arrays are easier to manage than more.

3.) [Ntp] Number of distinct technology platforms: EMC alone has a full quiver. NetApp, Hitachi and IBM all have several flavors, and that’s before considering the plethora of niche players. Aggressive administration and engineering often require completely different sets of skills and experience for each platform. It is true that one person can carry the water for multiple technologies, but if your procurement strategy looks like a Baskin-Robbins menu you are going to need several experts, and more experts to back them up.

4.) [Nam] Number of access models in play: FC-SAN block, iSCSI, NAS and object storage models all sound like apples-to-oranges comparisons because they are. Once I start mucking with my access models I have to consider network architecture, application compatibility and host connectivity. We cannot always simplify the stack, either, since demand cares not a whit about solution complexity. Object storage tends to trade higher latency and lower performance for nearly unlimited scalability. You want that to work with your traditional Fibre Channel SAN-connected hosts? It can be done, but it is not as simple as throwing a spare HBA at the system admin. New methods require new skills.

5.) [Nl] Number of locations: In the grand scheme of things, this is a big factor that is consistently overlooked. If you have two data centers, racks or communication closets, the only time you get a pass on increased labor requirements is when the two doors are side by side. If I have to get in an elevator, that adds time. If I have to drive down the street, more time. If I have to fly between Napa and Los Angeles…you get the picture. Walking out the door always carries a labor cost and adds to your potential for downtime in an emergency.

6.) [Nos] Number of distinct operating system types to support: Mainframe, HP-UX, Linux, Windows, SCO (ha!), OS/2 (I’m reaching here, aren’t I?). Every new host operating system adds complexity. Even O/S versions can add complexity; you know this to be true, so why fight it? Your system administrators are probably well versed in managing multiple platforms, but you still hire for their expertise in one or another.

7.) [La] Level of automation employed: Thin provisioning, automated storage tiering, auto-provisioning and virtual storage are all time savers. Every little bit helps eliminate some drudgery from the day-to-day. Implemented poorly, however, these tools actually ADD time to the workday. What happens when you deploy FAST Cache for applications that do not play well in that sandbox? You just signed up for more performance troubleshooting! Even better: your reporting tools don’t report on your specific virtualization implementation? Then you’ve got a LOT of manual reports to produce, collate and justify to everyone who disagrees with how you did it.

8.) [Sm] Support model: What is my typical workday and days per week for storage support? Have I outsourced or do I provide 100% in-house management? Are we on call? If so, are we on call for everything or just escalations? Staffing to this model is all about defining the depth of role and training the help desk to handle more of the call load.

9.) [Sla] Differentiated SLAs: Are there SLAs in place? Are they tiered? How many? Do I have an immediate escalation path for some applications? If I don’t have an SLA, do I have service level expectations (that will never be met, of course)? SLAs make things easier to manage, not harder. Undocumented expectations make management nearly impossible.
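The support-model questions in item 8 can be turned into a number in many ways. One simple, hypothetical approach is to divide the weekly hours of coverage you must staff by a standard working week, so business-hours support scores 1.0 and round-the-clock coverage scores much higher. The 40-hour week and the function name are assumptions, and this sketch ignores outsourcing and escalation-only on-call, both of which would pull the figure down.

```python
def support_model_multiplier(hours_per_day: float, days_per_week: float,
                             standard_week_hours: float = 40.0) -> float:
    """Hypothetical Sm: staffed coverage hours per week relative to one
    standard working week. Ignores outsourcing and escalation-only on-call."""
    if not (0 < hours_per_day <= 24 and 0 < days_per_week <= 7):
        raise ValueError("coverage must fit within a 24x7 week")
    return (hours_per_day * days_per_week) / standard_week_hours


# Compare a few common coverage windows (hours per day x days per week).
for label, hours, days in [("8x5", 8, 5), ("12x6", 12, 6), ("24x7", 24, 7)]:
    print(f"{label}: Sm = {support_model_multiplier(hours, days):.2f}")
```

Under this reading, moving from business hours to 24x7 coverage multiplies the workload term by more than four before any other factor is considered.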

N = ((Rd*.1)*Na*Ntp*Nam*Nl*Nos*La*Sm*Sla)+1

The +1 is for your backfill. People do go on vacation from time to time, after all.
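For readers who want to plug in numbers, here is a minimal Python sketch of the formula above. The field names mirror the variable labels; the example values are hypothetical placeholders, since only Rd has a stated 1-10 scale and the other factors are left to your own judgment. Rounding up to a whole headcount with `math.ceil` is an added assumption, not part of the formula.

```python
import math
from dataclasses import dataclass


@dataclass
class StaffingFactors:
    rd: float    # Depth of Role, scored 1-10
    na: float    # Number of arrays
    ntp: float   # Number of distinct technology platforms
    nam: float   # Number of access models in play
    nl: float    # Number of locations
    nos: float   # Number of distinct operating system types
    la: float    # Level of automation (< 1 saves time, > 1 adds time)
    sm: float    # Support model multiplier (scale is an assumption)
    sla: float   # Differentiated-SLA multiplier (scale is an assumption)


def storage_fte(f: StaffingFactors) -> float:
    """N = ((Rd*.1)*Na*Ntp*Nam*Nl*Nos*La*Sm*Sla)+1"""
    if not 1 <= f.rd <= 10:
        raise ValueError("Rd is scored on a 1-10 scale")
    workload = (f.rd * 0.1) * f.na * f.ntp * f.nam * f.nl * f.nos * f.la * f.sm * f.sla
    return workload + 1  # the +1 is the backfill for vacations


# Hypothetical shop: mid-depth role, two arrays, one platform, block + NAS,
# one site, two host OS types, automation that halves the effort,
# business-hours support, and tiered SLAs that trim a quarter of the effort.
example = StaffingFactors(rd=5, na=2, ntp=1, nam=2, nl=1, nos=2, la=0.5, sm=1.0, sla=0.75)
n = storage_fte(example)
print(f"N = {n:.2f}, rounded up to {math.ceil(n)} FTE")  # prints: N = 2.50, rounded up to 3 FTE
```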

There are at least nine distinct variables to account for, and probably a few I missed. Some of the values are fractions that reduce your FTE requirements; others are whole numbers that increase them. Keep in mind that these are just the variables you can control. As for those you can’t:
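Because every factor is multiplied, changing any single value rescales the entire workload term rather than adding a fixed amount. The sketch below makes that concrete with hypothetical numbers: opening a second location doubles the variable part of N, while automation that halves the effort (La = 0.5) halves it. The values are illustrative only, and passing the eight non-Rd factors as a dictionary is an assumption made for brevity.

```python
from math import prod


def storage_fte(rd: float, factors: dict[str, float]) -> float:
    """N = ((Rd*.1) * Na*Ntp*Nam*Nl*Nos*La*Sm*Sla) + 1, with the eight
    non-Rd factors passed as a dict of hypothetical values."""
    return (rd * 0.1) * prod(factors.values()) + 1


# Hypothetical baseline: 4 arrays, 2 platforms, 2 access models, 1 site,
# 2 host OS types, and neutral (1.0) automation, support and SLA factors.
baseline = {"Na": 4, "Ntp": 2, "Nam": 2, "Nl": 1, "Nos": 2, "La": 1.0, "Sm": 1.0, "Sla": 1.0}
RD = 5

base_n = storage_fte(RD, baseline)
for name, value in [("Nl", 2), ("La", 0.5)]:
    scenario = {**baseline, name: value}  # change exactly one factor
    print(f"{name} {baseline[name]} -> {value}: N goes from {base_n:.1f} "
          f"to {storage_fte(RD, scenario):.1f}")
```

Note how quickly a modest shop reaches double-digit headcount: with nine multiplied guesses, an error in any one of them propagates to the whole answer.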

- What about the corporate culture? Is everything an escalation?

- Is your employee turnover rate high? Are you constantly training new hires?

- Is everything a one-off? Do your people document their tasks in a run book or procedure guide so everything is managed consistently?

- Do you get sued abnormally often? Do you have to constantly maintain a compliance archive, copy terabytes of e-mail and service the courts as much as your own applications?

- Do your applications hoard storage in fear of a shortage or the next data-pocalypse?

- Is your growth rate so massive that you employ a team of “rack and stack” folks just to keep installing new gear every week?

I’ll stop beating the dead horse now. You get the idea. Factors completely outside of IT can make infrastructure easier or harder to manage, and they have a disproportionately large effect, compounding the problem of using this “simple” metric.

If you want to use a ratio of people to infrastructure elements to gauge your efficiency, guide your hiring plan, or compare yourself to the next best company on the block, you are probably out of luck. The differences between your organization and any other are likely to be far larger than the similarities. If you want to use that ratio to track your own efficiency over time, go for it. What you should really be doing is examining ways to minimize unnecessary work. That means clear roles and responsibilities so engineers and architects can engineer and architect instead of handling administration. It requires you to define a service catalog, SLAs and standard infrastructure builds so deployments, support and capacity needs are consistent and predictable. You need to capture procedural run books for common day-to-day activities so more can be done by junior staff. Finally, explore the universe of automation and hands-off resource management such as thin provisioning and automated tiering.
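Tracking the ratio against your own history, the one use of TB:FTE this post endorses, needs nothing more than a few periodic snapshots. A minimal sketch, assuming you record terabytes under management and storage headcount each quarter; the class name, field names and figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional, Tuple


@dataclass
class Snapshot:
    period: str
    terabytes_managed: float
    storage_fte: float

    @property
    def tb_per_fte(self) -> float:
        return self.terabytes_managed / self.storage_fte


def tb_per_fte_trend(
    snapshots: Iterable[Snapshot],
) -> Iterator[Tuple[str, float, Optional[float]]]:
    """Yield (period, TB:FTE, % change from the previous period) for ONE organization."""
    previous: Optional[float] = None
    for snap in snapshots:
        ratio = snap.tb_per_fte
        change = None if previous is None else (ratio - previous) / previous * 100
        yield snap.period, ratio, change
        previous = ratio


# Hypothetical quarterly history for a single shop.
history = [
    Snapshot("Q1", terabytes_managed=400, storage_fte=4),
    Snapshot("Q2", terabytes_managed=480, storage_fte=4),
    Snapshot("Q3", terabytes_managed=600, storage_fte=5),
]
for period, ratio, change in tb_per_fte_trend(history):
    delta = "n/a" if change is None else f"{change:+.1f}%"
    print(f"{period}: {ratio:.0f} TB per FTE ({delta})")
```

A rising ratio only says the same team now carries more terabytes; whether that reflects better automation or an overworked staff is a question the number cannot answer on its own.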

You don’t need a complex formula to optimize staffing. You need to adopt the mindset of a service provider. But if you insist on a formula, make sure it’s got a + in it. Or just keep in mind the second half of Jerry Thornton’s quote:

“It’s what their customers want DONE with those beans that determines how many people Starbucks hires.”

Amen. A venti “red eye” for me, please. No room for cream.

